The illusory promise of the Aligned Rank Transform

A systematic study of rank transformations

This paper is under review on the experimental track of the Journal of Visualization and Interaction. See the reviewing process.

1 Introduction

In Human-Computer Interaction (HCI) and many areas of the behavioral sciences, researchers frequently collect data through user studies. Such data are often messy, involve small sample sizes, and violate common statistical assumptions, such as the normality assumption. To address these challenges, researchers commonly rely on nonparametric tests, which require fewer assumptions about the underlying data. However, while nonparametric tests for simple one-factorial designs are well-established, researchers face challenges in selecting appropriate methods when dealing with multifactorial designs that require testing for both main effects and interactions.

The Aligned Rank Transform or ART (Higgins, Blair, and Tashtoush 1990; Salter and Fawcett 1993; Wobbrock et al. 2011) was introduced to address this problem by bridging the gap between nonparametric tests and ANOVA. Its popularity in HCI research has surged, in part due to the ARTool toolkit (Wobbrock et al. 2011; Kay et al. 2021), which makes the method easy to apply. Oppenlaender and Hosio (2025) identify the paper by Wobbrock et al. (2011) as a major milestone in HCI research, ranking it third in terms of citations in the ACM CHI Proceedings (1981-2024), behind Braun and Clark’s (2006) article on thematic analysis and Hart and Staveland’s (1988) work on the NASA-TXL index.

However, given their substantial impact on research outcomes in our community, these widely adopted methods deserve closer scrutiny. As the first author previously argued (Tsandilas 2018), the HCI community lacks sufficiently expertise to critically assess the validity of statistical techniques. Our findings suggest that the widespread use of ART largely stems from limited awareness of its underlying assumptions. Many researchers treat ART as a general-purpose nonparametric alternative when ANOVA’s assumptions are violated, overlooking the fact that ART’s alignment mechanism imposes even stricter assumptions — including linear relationships between the predictors and responses, equal variances, and continuous data.

Early Monte Carlo experiments in the 1990s (Salter and Fawcett 1993; Mansouri and Chang 1995) and more recent studies (Elkin et al. 2021) suggested that ART is a robust alternative to ANOVA when normality assumptions are violated. These results have contributed to ART’s reputation as a well-established method. However, other research (Lüpsen 2017, 2018) raised concerns about the robustness of the method, demonstrating that ART fails to control the Type I error rate in many scenarios, such as when data are ordinal or are drawn from skewed distributions. Unfortunately, these warnings have received little attention, and many authors continue to rely on Wobbrock et al.’s (2011) assertion that “The ART is for use in circumstances similar to the parametric ANOVA, except that the response variable may be continuous or ordinal, and is not required to be normally distributed.”

This paper aims to clarify the severity of these issues and understand the potential risks of the method. We present the results of a series of Monte Carlo experiments in which we simulate a broad spectrum of distributions, both discrete and continuous. Throughout the analysis, we pay particular attention to how the definition of the null hypothesis affects the interpretation of results. To improve clarity and reproducibility, we decompose the study into multiple focused experiments, each targeting a specific factor (e.g., sample size, experimental design, standard-deviation ratio) or outcome measure (Type I error rate, statistical power, and the precision of effect size estimates).

Our findings corroborate Lüpsen’s alarming conclusions. We provide overwhelming evidence that ART confounds effects and raises Type I error rates at very high levels across a wide range of non-normal distributions, including skewed, binomial, and ordinal distributions, as well as distributions with unequal variances. These issues persist for both main and interaction effects. Our results further show that simpler rank transformation methods outperform ART, while parametric ANOVA generally poses fewer risks than ART when distributions deviate from normal. Based on these results, we conclude that ART is not a viable analysis method and should be abandoned. We offer recommendations for alternative analysis methods, while we also raise warnings about the analysis and interpretation of interaction effects.

Illustrative example

We will begin with an illustrative example to demonstrate how the aligned rank transform can lead to an increase in false positives and a significant inflation of observed effects. This example will also serve as a brief introduction to the key concepts and methods employed throughout the paper.

Suppose a team of HCI researchers conduct an experiment to compare the performance of three user interface techniques (A, B, and C) that help users complete image editing tasks of four different difficulty levels. The experiment follows a fully balanced \(4 \times 3\) within-subject factorial design, where each participant (N = 12) performs 12 tasks in a unique order. The researchers measure the time that it takes participants to complete each task. In addition, the participants rate their perceived efficiency completing each task, using an ordinal scale with five levels: (1) inefficient, (2) somewhat inefficient, (3) neutral, (4) somewhat efficient, and (5) efficient.

The following table presents the experimental results:

Example dataset: Time (in minutes) spent by 12 participants and their perceived efficiency (ordinal scale) for four difficulty levels and three user interface techniques. Scroll down for full results.

| Participant | Difficulty | Technique | Time | Perceived_Efficiency |

|---|---|---|---|---|

| P01 | Level1 | A | 0.20 | efficient |

| P01 | Level1 | B | 0.17 | somewhat efficient |

| P01 | Level1 | C | 0.14 | neutral |

| P01 | Level2 | A | 0.45 | efficient |

| P01 | Level2 | B | 0.50 | efficient |

| P01 | Level2 | C | 0.27 | neutral |

| P01 | Level3 | A | 0.64 | efficient |

| P01 | Level3 | B | 0.63 | efficient |

| P01 | Level3 | C | 0.83 | somewhat efficient |

| P01 | Level4 | A | 0.75 | efficient |

| P01 | Level4 | B | 1.35 | neutral |

| P01 | Level4 | C | 1.25 | somewhat efficient |

| P02 | Level1 | A | 0.71 | efficient |

| P02 | Level1 | B | 0.47 | efficient |

| P02 | Level1 | C | 0.59 | efficient |

| P02 | Level2 | A | 1.82 | efficient |

| P02 | Level2 | B | 0.87 | efficient |

| P02 | Level2 | C | 1.29 | efficient |

| P02 | Level3 | A | 1.35 | efficient |

| P02 | Level3 | B | 4.44 | efficient |

| P02 | Level3 | C | 1.92 | efficient |

| P02 | Level4 | A | 3.22 | efficient |

| P02 | Level4 | B | 9.62 | efficient |

| P02 | Level4 | C | 5.33 | efficient |

| P03 | Level1 | A | 0.55 | efficient |

| P03 | Level1 | B | 0.21 | efficient |

| P03 | Level1 | C | 0.43 | efficient |

| P03 | Level2 | A | 0.88 | efficient |

| P03 | Level2 | B | 1.07 | efficient |

| P03 | Level2 | C | 1.36 | efficient |

| P03 | Level3 | A | 0.85 | efficient |

| P03 | Level3 | B | 0.66 | efficient |

| P03 | Level3 | C | 2.19 | efficient |

| P03 | Level4 | A | 3.21 | efficient |

| P03 | Level4 | B | 3.44 | efficient |

| P03 | Level4 | C | 2.07 | efficient |

| P04 | Level1 | A | 0.54 | efficient |

| P04 | Level1 | B | 0.41 | efficient |

| P04 | Level1 | C | 0.52 | neutral |

| P04 | Level2 | A | 1.02 | somewhat efficient |

| P04 | Level2 | B | 0.61 | efficient |

| P04 | Level2 | C | 0.80 | efficient |

| P04 | Level3 | A | 2.19 | neutral |

| P04 | Level3 | B | 1.75 | efficient |

| P04 | Level3 | C | 2.36 | efficient |

| P04 | Level4 | A | 9.82 | efficient |

| P04 | Level4 | B | 4.32 | efficient |

| P04 | Level4 | C | 3.58 | efficient |

| P05 | Level1 | A | 0.79 | efficient |

| P05 | Level1 | B | 0.78 | efficient |

| P05 | Level1 | C | 0.45 | neutral |

| P05 | Level2 | A | 2.41 | efficient |

| P05 | Level2 | B | 0.99 | efficient |

| P05 | Level2 | C | 1.80 | efficient |

| P05 | Level3 | A | 2.70 | efficient |

| P05 | Level3 | B | 2.85 | efficient |

| P05 | Level3 | C | 2.25 | efficient |

| P05 | Level4 | A | 3.13 | efficient |

| P05 | Level4 | B | 5.38 | somewhat efficient |

| P05 | Level4 | C | 6.24 | efficient |

| P06 | Level1 | A | 0.64 | efficient |

| P06 | Level1 | B | 0.23 | efficient |

| P06 | Level1 | C | 0.34 | efficient |

| P06 | Level2 | A | 0.90 | efficient |

| P06 | Level2 | B | 0.93 | efficient |

| P06 | Level2 | C | 0.45 | efficient |

| P06 | Level3 | A | 1.32 | efficient |

| P06 | Level3 | B | 1.34 | efficient |

| P06 | Level3 | C | 1.80 | efficient |

| P06 | Level4 | A | 5.47 | efficient |

| P06 | Level4 | B | 4.64 | efficient |

| P06 | Level4 | C | 2.50 | efficient |

| P07 | Level1 | A | 0.17 | efficient |

| P07 | Level1 | B | 0.17 | efficient |

| P07 | Level1 | C | 0.16 | efficient |

| P07 | Level2 | A | 0.24 | efficient |

| P07 | Level2 | B | 0.30 | neutral |

| P07 | Level2 | C | 0.27 | efficient |

| P07 | Level3 | A | 0.72 | inefficient |

| P07 | Level3 | B | 1.30 | efficient |

| P07 | Level3 | C | 0.36 | efficient |

| P07 | Level4 | A | 1.06 | neutral |

| P07 | Level4 | B | 1.01 | efficient |

| P07 | Level4 | C | 0.55 | efficient |

| P08 | Level1 | A | 0.36 | efficient |

| P08 | Level1 | B | 0.38 | efficient |

| P08 | Level1 | C | 0.22 | efficient |

| P08 | Level2 | A | 0.69 | efficient |

| P08 | Level2 | B | 1.02 | efficient |

| P08 | Level2 | C | 0.89 | neutral |

| P08 | Level3 | A | 0.99 | efficient |

| P08 | Level3 | B | 1.75 | efficient |

| P08 | Level3 | C | 1.15 | efficient |

| P08 | Level4 | A | 3.87 | efficient |

| P08 | Level4 | B | 4.63 | efficient |

| P08 | Level4 | C | 4.89 | efficient |

| P09 | Level1 | A | 0.33 | efficient |

| P09 | Level1 | B | 0.24 | efficient |

| P09 | Level1 | C | 0.21 | efficient |

| P09 | Level2 | A | 0.39 | efficient |

| P09 | Level2 | B | 1.01 | efficient |

| P09 | Level2 | C | 0.42 | efficient |

| P09 | Level3 | A | 0.79 | efficient |

| P09 | Level3 | B | 1.17 | efficient |

| P09 | Level3 | C | 1.86 | efficient |

| P09 | Level4 | A | 3.47 | neutral |

| P09 | Level4 | B | 2.86 | efficient |

| P09 | Level4 | C | 1.21 | efficient |

| P10 | Level1 | A | 0.18 | efficient |

| P10 | Level1 | B | 0.24 | efficient |

| P10 | Level1 | C | 0.59 | neutral |

| P10 | Level2 | A | 0.27 | efficient |

| P10 | Level2 | B | 0.77 | neutral |

| P10 | Level2 | C | 0.61 | efficient |

| P10 | Level3 | A | 1.57 | efficient |

| P10 | Level3 | B | 0.90 | efficient |

| P10 | Level3 | C | 1.43 | somewhat efficient |

| P10 | Level4 | A | 4.73 | efficient |

| P10 | Level4 | B | 3.71 | efficient |

| P10 | Level4 | C | 1.13 | efficient |

| P11 | Level1 | A | 0.15 | somewhat efficient |

| P11 | Level1 | B | 0.57 | somewhat efficient |

| P11 | Level1 | C | 0.38 | somewhat inefficient |

| P11 | Level2 | A | 1.43 | neutral |

| P11 | Level2 | B | 0.39 | efficient |

| P11 | Level2 | C | 0.79 | efficient |

| P11 | Level3 | A | 1.90 | efficient |

| P11 | Level3 | B | 2.14 | neutral |

| P11 | Level3 | C | 0.89 | somewhat inefficient |

| P11 | Level4 | A | 3.92 | neutral |

| P11 | Level4 | B | 7.19 | somewhat efficient |

| P11 | Level4 | C | 3.62 | inefficient |

| P12 | Level1 | A | 0.23 | efficient |

| P12 | Level1 | B | 0.41 | efficient |

| P12 | Level1 | C | 0.56 | efficient |

| P12 | Level2 | A | 1.09 | efficient |

| P12 | Level2 | B | 1.06 | efficient |

| P12 | Level2 | C | 0.98 | efficient |

| P12 | Level3 | A | 1.70 | efficient |

| P12 | Level3 | B | 1.39 | efficient |

| P12 | Level3 | C | 1.42 | efficient |

| P12 | Level4 | A | 3.09 | efficient |

| P12 | Level4 | B | 6.24 | efficient |

| P12 | Level4 | C | 1.14 | efficient |

The experiment is hypothetical but has similarities with real-world experiments, e.g., see the experiments of Fruchard et al. (2023).

Time performance. Time measurements have been randomly sampled from a population in which: (1) Difficulty has a large main effect; (2) Technique has no main effect; and (3) there is no interaction effect between the two factors. To generate time values, we drew samples from a log-normal distribution. The log-normal distribution is often a good fit for real-world measurements that are bounded by zero and have low means but large variance (Limpert, Stahel, and Abbt 2001). Task-completion times are good examples of such measurements (Sauro and Lewis 2010).

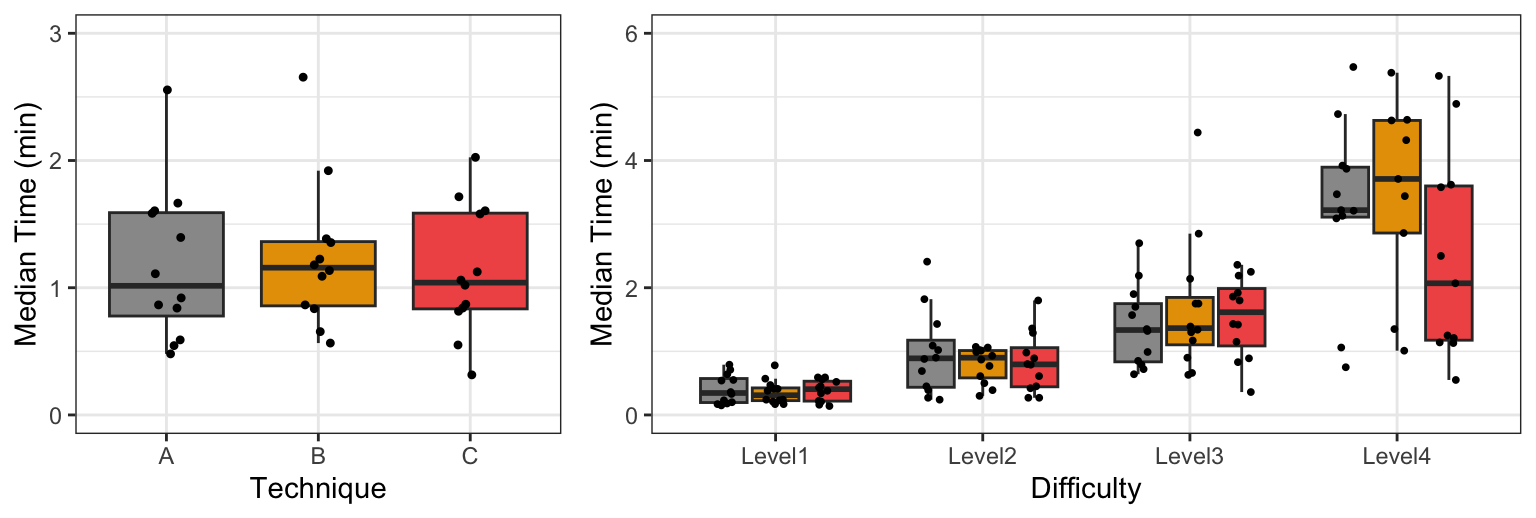

Figure 1 presents two boxplots that visually summarize the main effects observed through the experiment. We plot medians to account for the fact that distributions are skewed. We observe that differences in the overall time performance of the three techniques are not visually clear, although the overall median time is somewhat higher for Technique B. In contrast, time performance clearly deteriorates as task difficulty increases. We also observe that for the most difficult tasks (Level 4), the median time for Technique C is lower than the median time for Techniques A and B, so we may suspect that Difficulty interacts with Technique. However, since the spread of observed values is extremely large and the number of data points is small, such differences could result from random noise.

We opt for a multiverse analysis (Dragicevic et al. 2019) to analyze the data, where we conduct a repeated-measures ANOVA with four different data-transformation methods:

Log transformation (LOG). Data are transformed with the logarithmic function. For our data, this is the most appropriate method as we drew samples from a log-normal distribution.

Aligned rank transformation (ART). Data are transformed and analyzed with the ARTool (Wobbrock et al. 2011; Elkin et al. 2021).

Pure rank transformation (RNK). Data are transformed with the original rank transformation (Conover and Iman 1981), which does not perform any data alignment.

Inverse normal transformation (INT). The data are transformed by using their normal scores. This rank-based method is simple to implement and has been commonly used in some disciplines. However, it has also received criticism (Beasley, Erickson, and Allison 2009).

For comparison, we also report the results of the regular parametric ANOVA with no transformation (PAR). To simplify our analysis and like Elkin et al. (2021), we consider random intercepts but no random slopes. For example, we use the following R code to create the model for the log-transformed response:

m.log <- aov(log(Time) ~ Difficulty*Technique + Error(Participant), data = df)Alternatively, we can use a linear mixed-effects model, treating the participant identifier as a random effect:

m.log <- lmer(log(Time) ~ Difficulty*Technique + (1|Participant), data = df)The table below presents the p-values for the main effects of the two factors and their interaction:

| PAR | LOG | ART | RNK | INT | |

|---|---|---|---|---|---|

| Difficulty | \(1.8 \times 10^{-26}\) | \(8.1 \times 10^{-47}\) | \(9.0 \times 10^{-43}\) | \(4.3 \times 10^{-46}\) | \(4.4 \times 10^{-44}\) |

| Technique | \(.10\) | \(.18\) | \(.00062\) | \(.38\) | \(.17\) |

| Difficulty \(\times\) Technique | \(.056\) | \(.10\) | \(.0017\) | \(.24\) | \(.23\) |

The disparity in findings between ART and the three alternative transformation methods is striking. ART suggests that three is strong statistical evidence for all three effects. What adds to the intrigue is the fact that ART’s p-values for Technique and its interaction with Difficulty are orders of magnitude lower than the p-values obtained from all other methods. We will observe similar discrepancies if we conduct contrast tests with the ART procedure (Elkin et al. 2021), though we leave this as an exercise for the reader.

We also examine effect size measures, which are commonly reported in scientific papers. The table below presents results for partial \(\eta^2\), which describes the ratio of variance explained by a variable or an interaction:

| PAR | LOG | ART | RNK | INT | |

|---|---|---|---|---|---|

| Difficulty | \(.64\ [.55, 1.0]\) | \(.83\ [.79, 1.0]\) | \(.80\ [.76, 1.0]\) | \(.83\ [.79, 1.0]\) | \(.81\ [.77, 1.0]\) |

| Technique | \(.04\ [.00, 1.0]\) | \(.03\ [.00, 1.0]\) | \(.11\ [.03, 1.0]\) | \(.02\ [.00, 1.0]\) | \(.03\ [.00, 1.0]\) |

| Difficulty \(\times\) Technique | \(.10\ [.00, 1.0]\) | \(.08\ [.00, 1.0]\) | \(.16\ [.04, 1.0]\) | \(.06\ [.00, 1.0]\) | \(.06\ [.00, 1.0]\) |

We observe that ART exaggerates both the effect of Technique and its interaction with Difficulty. This example demonstrates a more general problem with ART’s alignment mechanism under long-tailed distributions. ART tends to confound effects: a strong effect on a single factor often leads the method to detect spurious effects on other factors or to identify non-existent interactions.

Perceived efficiency. The perceived efficiency ratings were randomly sampled from a population with no main effect on any of the two factors and no interaction effect. Figure 2 shows that participants’ ratings are concentrated at the higher end of the scale but with no clear trends.

For our analysis, we compare the results of PAR, ART, RNK, and INT, since LOG is not relevant for this type of data. We also report the results of the Friedman test (Friedman 1940) for each factor, where we first aggregate the data by taking the mean over the other factor. Results are as follows:

| PAR | ART | RNK | INT | Friedman Test | |

|---|---|---|---|---|---|

| Difficulty | \(.93\) | \(.0011\) | \(.86\) | \(.88\) | \(.83\) |

| Technique | \(.18\) | \(.00016\) | \(.32\) | \(.23\) | \(.24\) |

| Difficulty \(\times\) Technique | \(.27\) | \(.10\) | \(.33\) | \(.34\) |

ART’s p-values for the two main effects are surprisingly low, while no other method shows any substantial evidence for such effects. We also present the methods’ effect size estimates:

| PAR | ART | RNK | INT | |

|---|---|---|---|---|

| Difficulty | \(.004\ [.00, 1.0]\) | \(.12\ [.03, 1.0]\) | \(.006\ [.00, 1.0]\) | \(.005\ [.00, 1.0]\) |

| Technique | \(.03\ [.00, 1.0]\) | \(.13\ [.05, 1.0]\) | \(.02\ [.00, 1.0]\) | \(.02\ [.00, 1.0]\) |

| Difficulty \(\times\) Technique | \(.06\ [.00, 1.0]\) | \(.04\ [.00, 1.0]\) | \(.05\ [.00, 1.0]\) | \(.05\ [.00, 1.0]\) |

The discrepancies between ART’s estimates for the two main effects and those of the other methods are large. As we demonstrate in this paper, such discrepancies stem from ART’s inability to handle discrete distributions. The issue becomes more pronounced with larger sample sizes. Lüpsen (2017) previously warned researchers about this problem, but his warnings have largely been ignored.

Overview

The above example does not reflect a rare phenomenon. We will show that ART’s error inflation is systematic for a range of distributions that deviate from normality, both continuous and discrete.

The paper is structured as follows. Section 2 introduces nonparametric tests and rank transformations, and explains how ART is constructed. It also provides a summary of previous experimental results regarding the robustness of ART and other related rank-based procedures, along with a survey of recent studies using ART. Section 3 clarifies the method’s distributional assumptions. Section 4 investigates issues regarding the definition of the null hypothesis and the interpretation of main and interaction effects. Section 5 outlines our experimental methodology, while Section 6 presents our findings. Section 7 revisits results of previous studies employing ART and illustrates how its application can lead to erroneous conclusions. Section 8 offers recommendations for researchers. Finally, Section 9 concludes the paper.

In addition to the main sections of the paper, we provide an appendix (see Appendix I) with results from additional Monte Carlo experiments.

2 Background

We provide background on the history, scope, construction, and use of rank transformation methods, with a particular focus on ART. Additionally, we summarize the results of previous evaluation studies and position our own work within this context.

Nonparametric statistical tests

Classical ANOVA and linear regression are based on a linear model in which the error terms (residuals) are assumed to be independent, homoscedastic, and normally distributed. When sample sizes are sufficiently large, violations of the normality assumption become less problematic for inference on model coefficients because, under fairly general conditions, the sampling distributions of these coefficients are approximately normal (Central Limit Theorem). As a result, ANOVA is often reasonably robust to moderate departures from normality. However, this robustness weakens with small sample sizes, strong skewness, heavy tails, outliers, or heteroscedasticity, all of which can substantially affect Type I error rates and power.

The goal of nonparametric procedures is to mitigate these problems by making fewer and weaker assumptions about the underlying distributions. The original idea of replacing the data values by their ranks goes back to Spearman (1904). The key advantage of ranks is that they preserve the monotonic relationship of values while also allowing the derivation of an exact probability distribution. This probability distribution can then be used for statistical inference, such as calculating a p-value. The idea of using ranks was adopted by other researchers, leading to the development of various nonparametric tests commonly used today. Representative examples include the Mann-Whitney U test (Mann and Whitney 1947) and the Kruskal–Wallis test (Kruskal and Wallis 1952) for independent samples, and the Wilcoxon sign-rank test (Wilcoxon 1945) and the Friedman test (Friedman 1940) for paired samples and repeated measures.

Rank transformations

The scope of classical nonparametric tests is limited, as they only support simple experimental designs with a single independent variable. Rank transformations aim to address this limitation by bridging the gap between nonparametric statistics and ANOVA.

The rank transform. Conover (2012) provides a comprehensive introduction to the simple rank transform, which consists of sorting the raw observations and replacing them by their ranks. In the presence of ties, tied observations are assigned fractional ranks equal to their average order position in the ascending sequence of values. For example, the two \(0.3\) instances of the \(Y\) responses in Figure 3 (a), which are the smallest values in the dataset, receive a rank of \(1.5\) (see \(rank(Y)\)), while the next value (a \(0.4\)) in the sequence receives a rank of \(3\).

Conover and Iman (1981) showed that, for one-factor designs, applying ANOVA to these ranks yields tests that are asymptotically equivalent or good replacements of traditional nonparametric tests (see Section 4). However, a series of studies in the 80s raised caution flags on the use of the method, showing that it may confound main and interaction effects in two-factor and three-factor experimental designs. For an extensive review of these studies, we refer readers to Sawilowsky (1990).

The aligned rank transform. In response to these negative findings, researchers turned to aligned (or adjusted) rank transformations. Sawilowsky (1990) reviews several variants of aligned rank-based transformations (ART) and tests, while Higgins, Blair, and Tashtoush (1990) describe the specific ART method that we evaluate in this paper for two-factor designs. Wobbrock et al. (2011) later generalized the method to more than two factors, and more recently, Elkin et al. (2021) extended it to multifactor contrast testing.

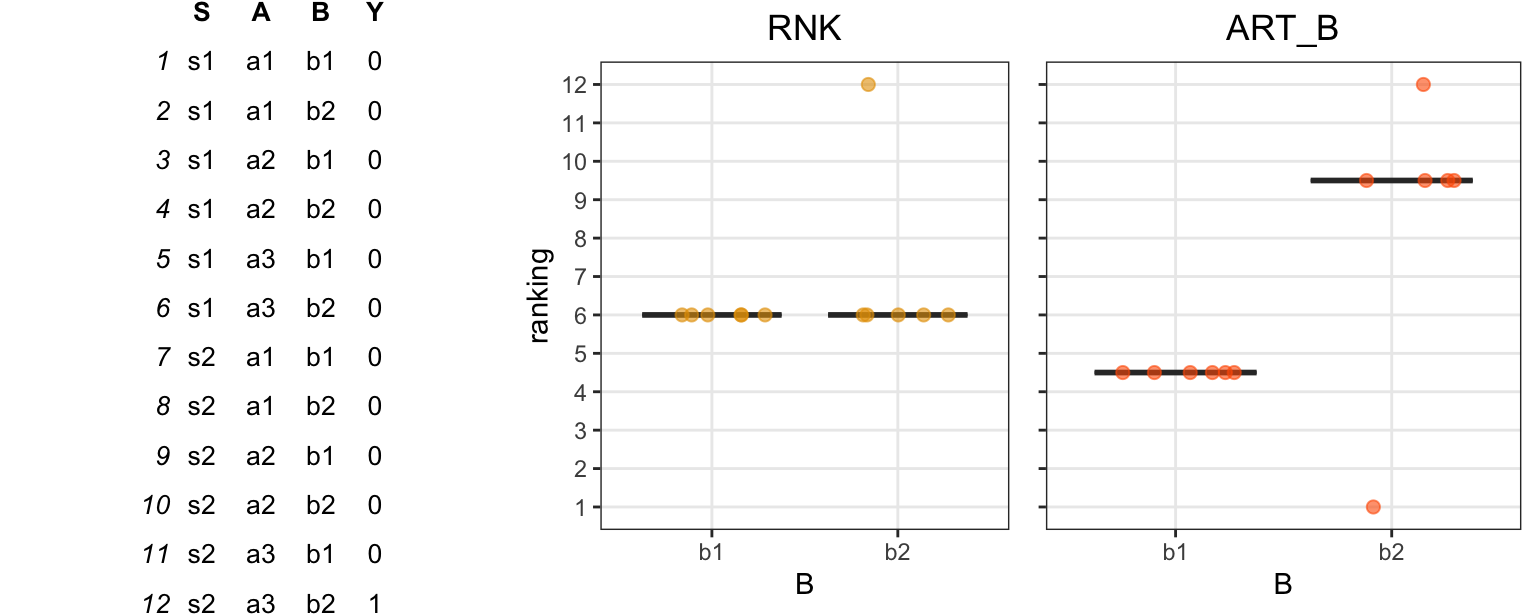

Figure 3 explains the general method for a design with two factors, \(A\) and \(B\). The key intuition behind the transform is that responses are ranked after they are aligned, such that effects of interest are separated from other effects. This implies that for each effect, either main or interaction, a separate ranking is produced. This is clearly illustrated in the example dataset shown in Figure 3 (a), where each aligned ranking (\(ART_A\), \(ART_B\), and \(ART_{AB}\)) is for testing a different effect (\(A\), \(B\), and \(A \times B\), respectively).

Figure 3 (b-c) details the calculation of the transformation, where we highlight the following terms: (i) residuals (in yellow) that represent the unexplained error (due to individual differences in our example); (ii) main effects (in green and pink) estimated from the observed means of the individual levels of the two factors; and (iii) interaction effect estimates (in blue). Observe that the estimates of the two main effects are subtracted from the interaction term. The objective of this approach is to eliminate the influence of main effects when estimating interaction effects.

Notice that even in this very small dataset (\(n = 2\)), the three ART rankings are distinct from one another and also differ significantly from the ranking produced by a simple rank transformation. Interestingly, identical responses (e.g., the two values of \(0.3\)) can be assigned very different ranks, while responses that differ substantially (e.g., \(0.3\) and \(3.0\)) may receive the same rank. More surprisingly, larger values can receive lower ranks — revealing that ART is a non-monotonic transformation. For instance, for subject \(s_1\) under condition \(b_1\), the responses are \(0.4\) and \(0.8\) for \(a_1\) and \(a_2\), yet their respective \(ART_A\) ranks are \(7.5\) and \(3.0\). In this case, ART significantly distorts the actual difference between the two groups, \(a_1\) and \(a_2\).

As we will show later, this behavior can lead to unstable results and misleading conclusions, and it represents a central flaw of the method. For example, running a repeated-measures ANOVA on these ART ranks yields a p-value of \(.044\) for the effect of \(A\). In contrast, using a repeated-measures ANOVA on the ranks of simple rank transformation produces a p-value of \(.46\), while applying a log-transformation — more appropriate for this type of data — yields a p-value of \(.85\).

This is not the only alignment technique discussed in the literature. Sawilowsky (1990) suggests that, at least for balanced designs, interactions could also be removed when aligning main effects, in the same way main effects are removed when aligning interactions. This approach is also taken by Leys and Schumann (2010), who derived a common ranking for both main effects after subtracting the interaction term. We do not evaluate these alternative alignment methods in this paper, as they are, to the best of our knowledge, not commonly used in practice.

The inverse normal transform. A third transformation method we evaluate is the rank-based inverse normal transformation (INT). INT has been in use for over 70 years (Waerden 1952) and exists in several variations (Beasley, Erickson, and Allison 2009; Solomon and Sawilowsky 2009). Its general formulation is as follows:

\[ INT(Y) = \Phi^{-1}(\frac{rank(Y) - c}{N - 2c + 1}) \tag{1}\]

where \(N\) is the total number of observations and \(\Phi^{-1}\) is the standard normal quantile function, which transforms a uniform distribution of ranks into a normal distribution. Different authors have used a different parameter \(c\). In our experiments, we use the Rankit formulation (Bliss, Greenwood, and White 1956), where \(c = 0.5\), since past simulation studies (Solomon and Sawilowsky 2009) have shown that it is more accurate than alternative formulations. However, as Beasley, Erickson, and Allison (2009) report, the choice of \(c\) is of minor importance. For our experiments, we implement the INT method in R as follows:

INT <- function(x){

qnorm((rank(x) - 0.5)/length(x))

}Figure 3 (a) shows how this function transforms the responses for our example dataset.

Other non-parametric rank-based methods. Several other rank-based statistical tests handle interactions, with the ANOVA-type statistic (ATS) (Brunner and Puri 2001) being the most representative one. Kaptein, Nass, and Markopoulos (2010) introduced this method to the HCI community, advocating its use for analyzing Likert-type data as an alternative to parametric ANOVA. Beyond ATS, Lüpsen (2017, 2018, 2023) examined several other multifactorial nonparametric methods. In particular, the author evaluated the hybrid ART+INT technique proposed by Mansouri and Chang (1995), which applies INT on the ranks of ART. He also tested multifactorial generalizations of the van der Waerden test (Waerden 1952) and the Kruskal-Wallis and Friedman tests (Kruskal and Wallis 1952; Friedman 1940). The former is based on INT, but instead of using F-tests on the transformed values as part of ANOVA, it computes \(\chi^2\) ratios over sums of squares. These two methods are not widely available, but R implementations can be obtained from Lüpsen’s website (Lüpsen 2021).

Experimental evaluations

Previous studies have evaluated ART and related procedures using various Monte Carlo simulations, often producing conflicting results and conclusions.

Results in support of ART. A number of experiments conducted during the 80s and 90s suggested that ART is robust for testing interaction effects. Noteworthy instances include studies, such as those by Salter and Fawcett (1993), which compared the method to parametric ANOVA. The authors found that ART remains robust even in the presence of outliers or specific non-normal distributions, such as the logistic, exponential, and double exponential distributions. Their findings indicated only a marginal increase in error rates (ranging from 6.0% to 6.3% instead of the expected 5%) when applied to the exponential distribution. Furthermore, ART demonstrated superior statistical power compared to parametric ANOVA. Mansouri and Chang (1995) evaluated ART under a different set of non-normal distributions (normal, uniform, log-normal, exponential, double exponential, and Cauchy) in the presence of increasing main effects. Except for the Cauchy distribution, ART maintained Type I error rates close to nominal levels across all scenarios, irrespective of the magnitude of main effects. In contrast, the error rates of the rank transformation reached very high levels (up to \(100\%\)) as the magnitude of main effects increased, even under the normal distribution. ART only failed under the Cauchy distribution, which is well-known to be pathological.

More recently, Elkin et al. (2021) compared ART to parametric t-tests for testing multifactor contrasts under six distributions: normal, log-normal, exponential, double exponential, Cauchy, and Student’s t-distribution (\(\nu=3\)). Their results confirmed that ART keeps Type I error rates close to nominal levels across all distributions, except for the Cauchy distribution. In addition, they found that ART exhibits a higher power than the t-test.

While most evaluation studies have focused on continuous distributions, Payton et al. (2006) have also studied how various transformations (rank, ART, log-transform, and squared-root transform) perform under the Poisson distribution, focusing again on interaction effects when main effects were present. The authors found that ART and parametric ANOVA (no transformation) performed best, keeping Type I error rates close to nominal levels. All other transformations inflated error rates.

Warnings. While the above results indicate that ART is a robust method, other studies have identified some serious issues. The second author of this paper has observed that, in certain cases, ART seems to detect spurious effects that alternative methods fail to identify (Casiez 2022). Such informal observations, conducted with both simulated and real datasets, motivated us to delve deeper into the existing literature.

Carletti and Claustriaux (2005) report that “aligned rank transform methods are more affected by unequal variances than analysis of variance especially when sample sizes are large.” Years later, Lüpsen (2018) conducted a series of Monte Carlo experiments, comparing a range of rank-based transformations, including the rank transformation, ART, INT, a combination of ART and INT (ART+INT), and ATS. His experiments focused on a \(2 \times 4\) balanced between-subject design and a \(4 \times 5\) severely unbalanced design and tested normal, uniform, discrete uniform (integer responses from 1 to 5), log-normal, and exponential distributions, with equal or unequal variances. Furthermore, they tested both interaction and main effects when the magnitude of other effects increased. The results revealed that ART inflates error rates beyond acceptable levels in several configurations: right-skewed distributions (log-normal and exponential), discrete responses, unequal variances, and unbalanced designs. Lüpsen (2018) also found that using INT in combination with ART (ART+INT) is preferable to the pure ART technique. However, as the method still severely inflated error rates in many settings, Lüpsen (2018) concluded that both ART and ART+INT are “not recommendable.”

Another notable finding by Lüpsen (2018) was that the simple rank transformation “appeared not as bad as it is often described” (Lüpsen 2018), outperforming ART in many scenarios, such as discrete and skewed distributions, or distributions with unequal variances. These results are in full contradiction with the findings of Mansouri and Chang (1995).

The same author conducted an additional series of experiments (Lüpsen 2017), focusing on two discrete distributions (uniform and exponential) with a varying number of discrete levels: 2, 4, and 7 levels with integer values for the uniform distribution, and 5, 10, and 18 levels with integer values for the exponential distribution. Again, the Type I error rates of both ART and ART+INT reached very high levels, but error rates were especially pronounced when the number of discrete levels became small and the sample size increased. Given these results, the author’s conclusion was the following:

“the ART as well as the ART+INT cannot be applied to Likert and similar metric or ordinal scaled variables, e.g. frequencies like the number of children in a family or the number of goals, or ordinal scales with ranges from 1 to 5” (Lüpsen 2017).

Results on other rank-based methods. We are also interested in understanding how ART compares with INT, which is frequently used in some research domains, such as genetics research (Beasley, Erickson, and Allison 2009). Beasley, Erickson, and Allison (2009) conducted an extensive evaluation of the method and reached the conclusion that “INTs do not necessarily maintain proper control over Type 1 error rates relative to the use of untransformed data unless they are coupled with permutation testing.” Lüpsen (2018) included INT in his evaluation and found that in most cases, it maintained better control of Type I error rates compared to ART and the pure rank transformation, while also presenting a higher statistical power. However, he also identified several cases where INT failed to sufficiently control for Type I errors, such as design configurations with unequal variances in unbalanced designs or skewed distributions, and when testing interactions in the presence of non-null main effects.

In addition to INT, Lüpsen (2018) evaluated the ATS method but found it to suffer from low power while presenting similar challenges as the rank transformation with regard to Type I error rates. Amongst all evaluated methods, the author identified the generalized van der Waerden test as the method that provided the best overall control of Type I error rates. More recently, Lüpsen (2023) conducted a new series of experiments testing rank-based nonparametric methods on split-plot designs with two factors. While the author reported on various tradeoffs of the methods, he concluded that overall, the generalized van der Waerden test and the generalized Kruskal-Wallis and Friedman tests were the best performing methods.

The use of ART in experimental research

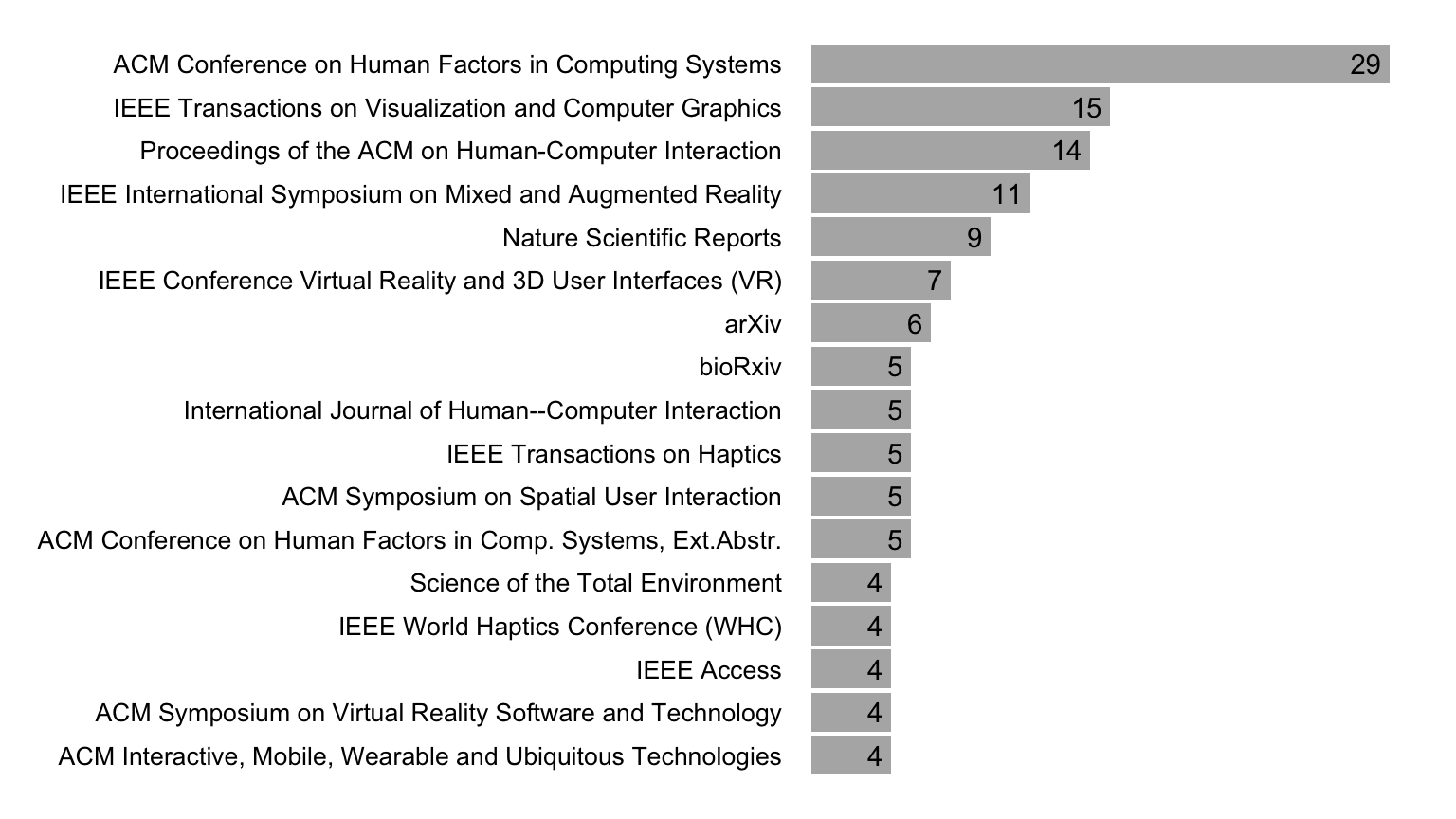

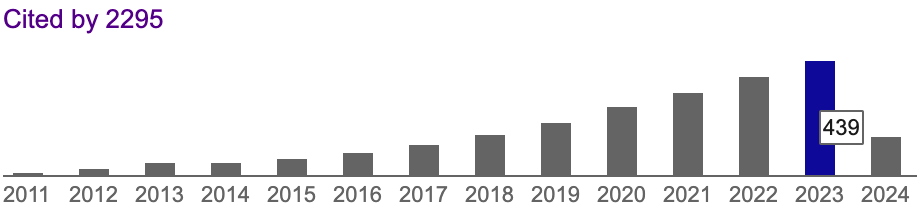

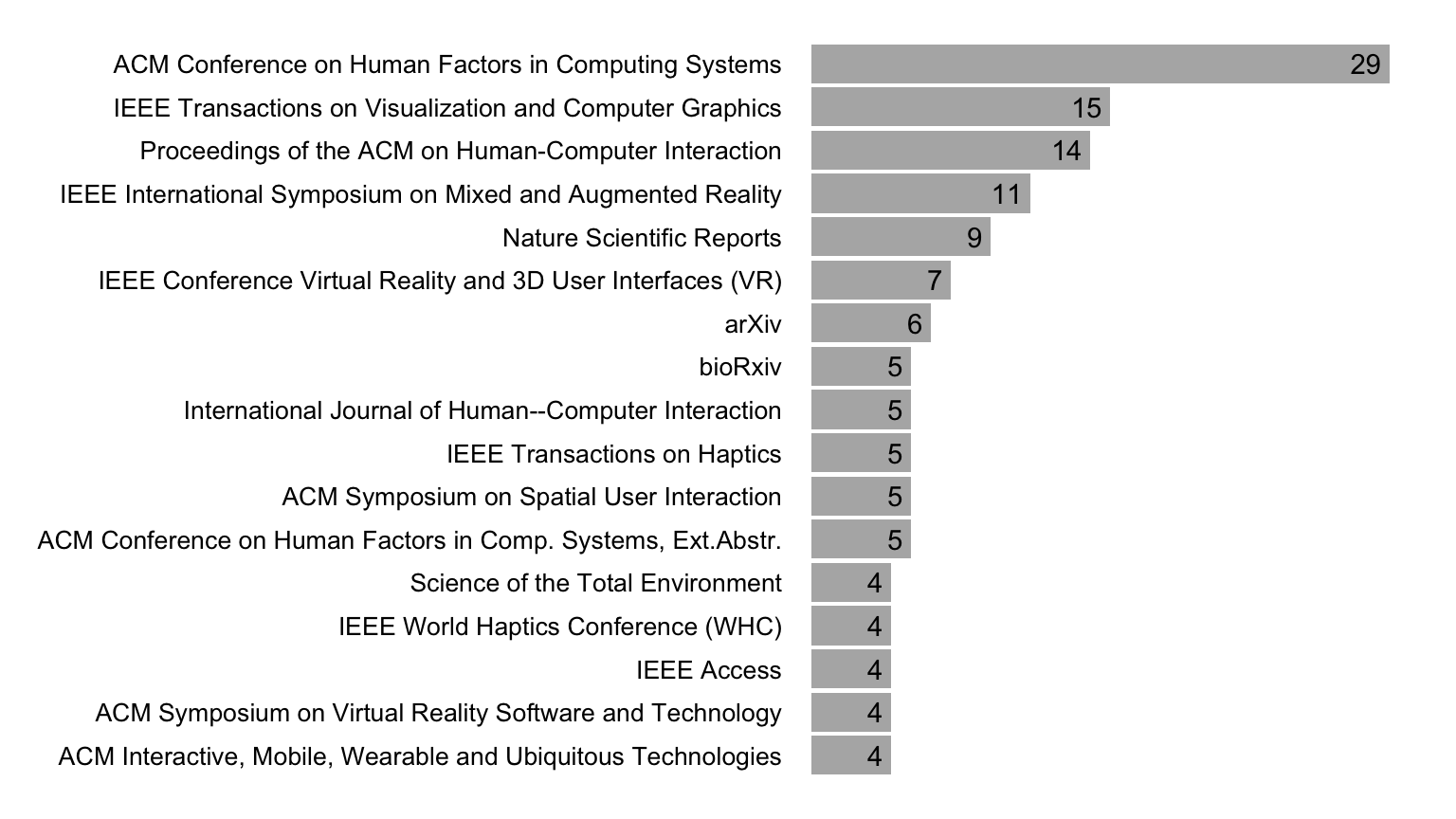

To get a sense of how frequently ART is used in experimental research, we examined the citations of ARTool (Wobbrock et al. 2011) indexed by Google Scholar. As shown in Figure 4), the rate of citations to the original CHI 2011 paper introducing the method to the HCI community is steadily increasing, with over 400 citations in 2023 alone.

Figure 5 presents the most frequently citing venues for 2023. We observe that the HCI, Augmented Reality (AR), and Virtual Reality (VR) venues dominate these citations. However, we found that ARTool is used in other scientific disciplines. For example, among the papers published in 2023, we counted nine Nature Scientific Reports and a total of 25 articles appearing in journals with the words “neuro” or “brain” in their title, including the Journal of Neuropsychology (three citations), the Journal of Neuroscience (two citations), the Journal of Cognitive Neuroscience (two citations), Neuropsychologia (two citations), Nature Neuroscience, Neuropharmacology, Brain, and NeuroImage.

To gain insights into the prevalent experimental designs using ART, we examined a subset of citing papers comprising the 39 most recent English publications as of November 2023. Among these, 25 report using a within-subject design, three papers report using a between-subject design, while 11 papers report using a mixed design that involved both between- and within-subject factors. The number of factors ranges from one to five, with two factors representing approximately 64% of the experimental designs. Participant counts in these studies vary between 12 and 468, with a median of 24 participants. Within-cell sample sizes (n) range from 8 to 48, with a median of 20.

Furthermore, we explored the types of data analyzed using ART. Among the 39 papers, 21 use ART for analyzing ordinal data, often in conjunction with other data types. Ordinal data include responses to Likert scales or other scales of subjective assessment, such as the NASA Task Load Index (NASA TXL) (Hart and Staveland 1988). Furthermore, several authors apply ART to individual ordinal items, including Likert items with five levels (two papers), seven levels (seven papers), and 11 levels (three papers). Other types of data for which the method is used include task-completion or response times, counts, and measures of accuracy, such as target hit rates or recall scores. A common rationale for employing ART for such measures is the detection of departures from normality assumptions, often identified through tests like the Shapiro-Wilk test. Interestingly, several authors use ART only for ratio data, opting for nonparametric tests such as the Friedman test when analyzing ordinal data.

Out of the 39 papers examined, 38 identify at least one statistically significant main effect using ART. Additionally, 30 papers conduct tests for interactions, with 24 of them reporting at least one statistically significant interaction effect. Only five papers have publicly available data. We revisit the results of three of these papers in Section 7.

Positioning

We observe that ART is frequently used for the analysis of experimental results. Interestingly, the cautions raised by Lüpsen (2017, 2018) have been widely overlooked, and ART is commonly applied to datasets, including Likert data with five or seven levels, where the method has been identified as particularly problematic. Given the contradictory findings in the existing literature, researchers grapple with significant dilemmas regarding which past recommendations to rely on.

Our goal is to verify Lüpsen’s findings by employing an alternative set of experimental configurations and a distinctly different methodology. While our simulation strategy is similar to that of Elkin et al. (2021), we investigate a larger range of experimental settings and critical scenarios that the authors did not consider, particularly the influence of non-null effects and discrete data.

With a focus on clarity, we chose to exclusively examine the three rank-based transformations presented earlier: the pure rank transformation (RNK), ART, and INT. Although we do not elaborate on the performance of ATS in the main paper, additional experimental results are available in Appendix I. These findings indicate that its performance is comparable to the rank transformation but seems to be inferior to the simpler and more versatile INT. Appendix I features additional results regarding the performance of the generalized van der Waerden test, as well as the generalized Kruskal-Wallis and Friedman tests. Our findings do not support the conclusions of Lüpsen (2018, 2023). They show that these methods suffer from severe losses of statistical power when more than one effect is present, without offering any clear advantages over RNK or INT.

Finally, we limit our primary investigation to balanced experimental designs. The rationale behind this choice is that we have not encountered any prior claims about ART’s suitability for unbalanced data, and the ARTool (Kay et al. 2021) issues a warning in such situations. However, Appendix I presents additional results on experimental designs with missing data, which confirm that in such situations, ART’s accuracy deteriorates further.

3 Revisiting ART’s distributional assumptions

ART is often regarded as a nonparametric method, but its alignment mechanism relies on a set of strict assumptions. For a two-way experimental design with independent observation, Higgins, Blair, and Tashtoush (1990) assume the following model for the responses:

\[ Y_{ijk} = \mu + \alpha_i + \beta_j + (\alpha \beta)_{ij} + \varepsilon_{ijk} \tag{2}\]

where \(\mu\) is the grand mean, \(\alpha_i\) is the main effect of the first factor for level \(i\), \(\beta_j\) is the main effect of the second factor for level \(j\), \((\alpha \beta)_{ij}\) is the interaction effect, and \(\varepsilon_{ijk}\) is the residual error term, assumed to have a common variance. The index \(k = 1, ..., n\) denotes the subject number.

This formulation makes several key assumptions:

- A linear relationship between effects and responses;

- A common variance among all experimental conditions; and

- Continuous responses.

The proof by Mansouri and Chang (1995) assessing the limiting distributional properties of ART for interaction effects is based on these same assumptions.

It is worth noting that ANOVA relies on similar assumptions, with the added requirement that errors be normally distributed. One might therefore argue that ART imposes fewer assumptions than ANOVA. Additionally, since ART is rank-based, it may appear reasonable to expect some degree of robustness to violations of the linear model.

Unfortunately, this line of reasoning is not correct. ART’s assumptions pertain specifically to its alignment mechanism, which is unique to this method. The rank transformation is applied only after alignment, and the authors of ART have not provided any theoretical or empirical justification for their alignment method under nonlinear models, non-equal variances, or discrete data. We explain the severity of these assumptions in more detail.

Violations of the linearity assumption

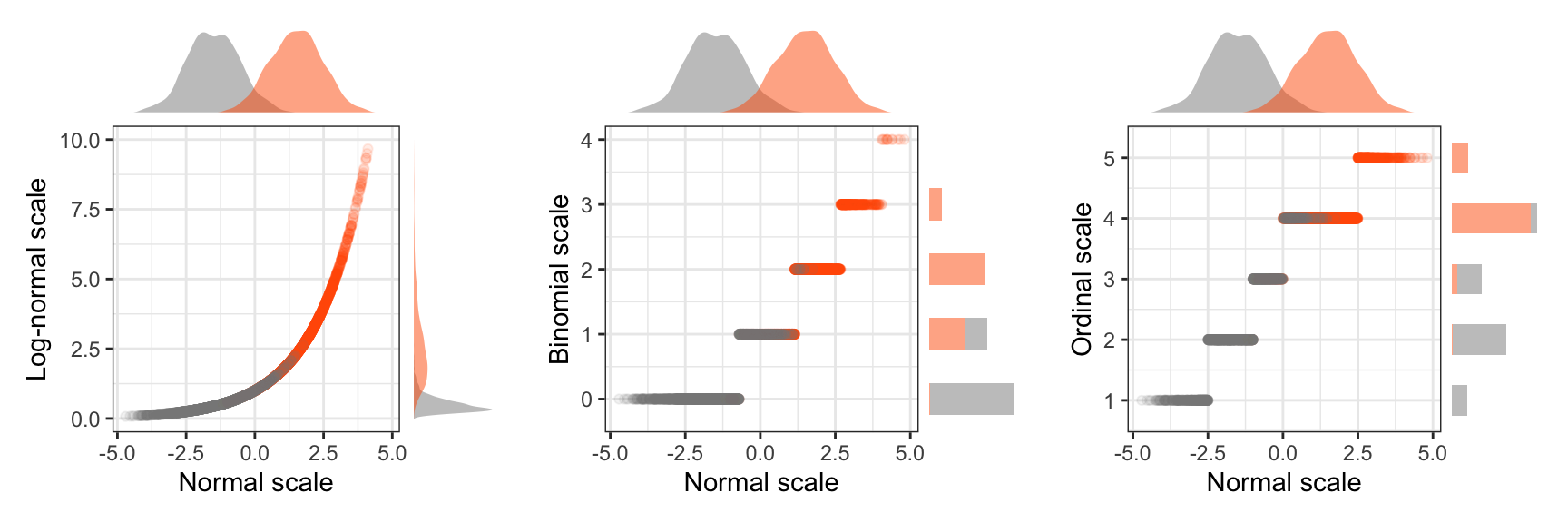

Suppose we studied image-editing tasks (as in our illustrative example), measuring the time participants needed to complete them for two difficulty levels: easy and hard. If we assume that observed times follow log-normal distributions, the model of Higgins, Blair, and Tashtoush (1990) will generate distributions as the ones in Figure 6. We observe that the distribution for the hard task is simply shifted to the right, while their shape conveniently remains identical. Mansouri and Chang (1995) have shown that at least for interaction effects, ART remains robust under such distributions. However, how realistic are these distributions?

Heavy-tailed distributions, such as the log-normal distribution here, commonly arise in nature when measurements cannot be negative or fall below a certain threshold (Limpert, Stahel, and Abbt 2001), e.g., the minimum time needed to react to a visual stimulus. In most experimental research, however, this threshold does not shift across conditions while preserving the distribution’s shape. Instead, distributions are more likely to resemble the ones in Figure 7.

In these distributions, the mean for hard tasks also increases, but this increase is not reflected as a simple global shift in the distribution. Instead, the overall shape of the distribution changes. The model we used to generate these distributions is structured as follows:

\[ log(Y_{ijk} - \theta) = \mu + \alpha_i + \beta_j + (\alpha \beta)_{ij} + \varepsilon_{ijk} \tag{3}\]

where \(\varepsilon_{ijk}\) is normally distributed, and \(\theta\) represents a threshold below which response values cannot occur. Unfortunately, ART’s alignment mechanism is not designed to handle such cases. The alignment calculations shown in Figure 3 include various mean terms that are sensitive to distribution differences across the levels of factors. In particular, the values of a random sample that appear in the tail of the right distribution can disproportionately influence the within-cell mean. This, in turn, leads to the exaggeration of all ranks within that cell, causing the method — as our results demonstrate — to confound effects.

We acknowledge that Elkin et al. (2021) diverged from the modeling approach described by Higgins, Blair, and Tashtoush (1990) and Mansouri and Chang (1995) and considered nonlinear models to evaluate ART. Yet, their experiments did not reveal that ART confounds effects, simply because they assessed Type I error rates only in scenarios where all main effects were null.

Heteroscedasticity issues

Myers et al. (2012) explain that “if the underlying distribution of the response variable is not normal, but is a continuous, skewed distribution, such as the lognormal, gamma, or Weibull distribution, we often find that the constant variance assumption is violated, and in such cases, “the variance is a function of the mean” (Pages 54-55). This pattern frequently occurs in studies measuring task-completion times, whether the task is visual, motor, or cognitive. As tasks become more difficult and prolonged, variance tends to increase. Similarly, slower participants generally exhibit greater variance across tasks compared to faster, more practiced users. This suggests that ART’s assumption of constant variance in skewed distributions is largely unrealistic.

Wagenmakers and Brown (2007) conducted an analysis of nine independent experiments to empirically investigate this pattern in response time distributions. They confirmed that standard deviations were proportional to the mean. The model we presented earlier (see Equation 3) is in line with these observations. Even when the standard deviation of the error term \(\varepsilon_{ijk}\) is constant, the standard deviation of observed log-normal distributions increases linearly with their mean. Figure 1 (right) shows this pattern in our illustrative example, where the standard deviation of time responses increases proportionally with task difficulty.

We will show that ART confounds effects under unequal variances regardless of whether the underlying distribution is skewed. Thus, the problem also arises under normal distributions.

Discrete distributions

As discussed earlier, Lüpsen (2017) found that ART frequently fails when responses are discrete. The problem becomes more pronounced for variables with few discrete levels and as the sample size increases. The author provides a detailed analysis of why this happens, summarized as follows:

“There is an explanation for the increase of the type I error rate when the number of distinct values gets smaller or the sample size larger: due to the subtraction of the other effects — a linear combination of the means — from the observed values even tiny differences between the means lead to large differences in the ranking” (Lüpsen 2017).

Figure 8 illustrates this problem using a simple dataset with a binary variable. In our example, all but one value are zero. Notice how dramatically ART alters the ranks and exaggerates the difference between the two levels of \(B\) (\(b_1\) vs. \(b_2\)). This issue persists even when a large number of participants are added and there is only a single value of 1 among thousands of 0s, causing the method to produce arbitrarily low p-values. Then, a single value variation (e.g., turning this 1 to 0) will cause ART’s results to drastically change. Although this example illustrates an extreme case, ART’s instability can manifest in various forms, inflating error rates even when responses span a wider range of discrete values.

4 Defining the null hypothesis of interest

Before comparing different statistical methods, it is essential to assess whether they are actually comparable. If two methods are not designed to test the same null hypothesis, then direct comparisons between them can be misleading. In what follows, we clarify the interpretation of main and interaction effects and explain how we address potential issues in our analysis.

Interpreting main effects

The traditional ANOVA is used to test differences between two or more means. However, nonparametric tests often target other population parameters. For example, the Wilcoxon sign-rank test is commonly described as a test of medians for paired samples (McDonald 2014) and is used when population means are not of interest, e.g., when population distributions are skewed. The Mann-Whitney U and the Kruskal–Wallis tests are used, instead, to assess whether two or more independent samples come from the same population, or more technically, whether the mean ranks of the groups are the same. They can be only interpreted as tests of medians under the strict assumption that the population distributions of all groups have identical shapes and scales (George W. Divine and Juarez-Colunga 2018).

Defining the null hypothesis of interest of a rank transformation is more challenging. Conover and Iman (1981) show that the simple rank transformation procedure (RNK) is equivalent to the Mann-Whitney U and Kruskal–Wallis tests for independent samples. For paired samples, however, it results in a new test, which is different from the Wilcoxon sign-rank test and the Friedman test. Defining the null hypothesis of interest of ART is even more challenging because of the hybrid nature of the method. In particular, while ART is a rank-based transformation procedure, it aligns data with respect to means, where alignment is performed independently for each group.

Dealing with interpretation issues. To avoid such interpretation issues, we focus — unless otherwise stated — on effects that apply monotonic transformations to population distributions. This also ensures a monotonic relationship between different measures of central tendency, such as medians and means (with the exception of the Cauchy distribution, where the mean is undefined). In other words, if a treatment increases the population mean, it will also increase the population median. We present an example in Figure 9. The figure shows two population distributions corresponding to the two intermediate levels of difficulty of our illustrative example (see Figure 1). We observe that the increased difficulty of the task translates both the population mean and the median to the right. In this case, we expect a statistical test to reject the null hypothesis, no matter whether it tests the population mean, the median, or the overall distribution shape.

Interpreting interaction effects

The ART procedure was proposed as an alternative to the rank transformation (Conover and Iman 1981) for testing interactions. As Higgins, Blair, and Tashtoush (1990) explained, the rank transformation is nonlinear and, as a result, it changes the structure of interactions. Therefore, “interaction may exist in the transformed data but not in the original data, or vice versa” (Higgins, Blair, and Tashtoush 1990). Figure 10 demonstrates the problem. In this example, the data have been sampled from perfectly normal distributions with equal variances. We observe that while no interaction effect appears in the original data (lines are parallel), the rank transformation deforms the trend. In particular, differences are more pronounced for the middle points of the three-level factor (“medium difficulty”). The figure also shows that the inverse normal transformation also deforms the interaction but to a lesser extent. Note that the problem emerges when the main effect is strong on all interacting factors.

ART aims to correct this problem. However, nonlinear transformations come into place in various ways in experimental designs (Loftus 1978; Wagenmakers et al. 2012). They can deform distributions, making the interpretation of observed effects especially challenging. Before presenting our experimental method, we discuss these problems and explain how our approach takes them into consideration.

Removable interactions. Let us take a different dataset from a fictional experiment (within-subject design with \(n = 24\)) that evaluates the performance of two techniques (Tech A and Tech B) under two task difficulty levels (easy vs. hard). The experiment, for example, could test a mobile typing task, where the levels of difficulty correspond to texts of different lengths (short vs. long) under two typing techniques (with vs. without auto-completion). We assume that the researchers measure two dependent variables: task-completion time and perceived performance, which is measured through a five-level ordinal scale (from “very quick” to “very slow”). In this example, the main effects of task difficulty and technique are large. It is less clear, however, whether there is also an interaction between the two factors.

Figure 11 visualizes the means for each combination of the levels of the factors and highlights the possible interactions. Let us first concentrate on the first two plots that present results for the time measure. The trends in the left plot indicate an interaction effect, since the two lines seem to diverge as the task difficulty increases.

But how meaningful is this interpretation of interaction? As we dicussed earlier, time measurements are often taken from skewed distributions where the variance is not constant. Therefore, large effects are harder to observe in quick tasks than in slow ones. However, such trends do not necessarily reveal any real interactions, because they are simply due to observations at different time scales. Figure 11 (middle) visualizes the effects using a logarithmic scale. Notice that the lines in the plot are now almost parallel, suggesting no interaction effect.

The concept of removable or uninterpretable interactions, that is, interactions that disappear after applying a monotonic nonlinear transformation, was introduced by Loftus (1978). Over three decades later, Wagenmakers et al. (2012) revisited this work and found that psychology researchers are largely unaware of the concept, drawing incorrect conclusions about psychological effects on the basis of meaningless interactions.

This issue also extends to data collected from questionnaires. The right plot in Figure 11 shows results for perceived performance. Again, the line trends suggest an interaction effect. Unfortunately, the scale is ordinal, which means that distances between the five levels of the scale may not be perceived as equal by people. Furthermore, the scale is bounded, so the reason that the two lines are not parallel might be simply due to the absence of additional levels beyond the extreme “very slow” ranking. Concluding that there is a meaningful interaction here could be incorrect. Liddell and Kruschke (2018) extensively discuss how ordinal scales deform interactions.

Formal testing. We now formally test the above interactions by using ANOVA with different transformation methods. Below, we present the p-value returned by each method for task-completion time:

| PAR | LOG | ART | RNK | INT |

|---|---|---|---|---|

| \(.023\) | \(.67\) | \(.00073\) | \(.66\) | \(.67\) |

We observe that RNK and INT lead to p-values very close to the p-value of LOG, which suggests a similar interpretation of interaction effects. In contrast, ART returns a very low p-value (lower than the p-value of the regular ANOVA), indicating a different interpretation.

We also test the interaction effect on the ordinal dependent variable:

| PAR | ART | RNK | INT | ATS |

|---|---|---|---|---|

| \(.0020\) | \(.00075\) | \(.0067\) | \(.0037\) | \(.0081\) |

Notice that we omit the log-transformation method (LOG), as it is not relevant in this context. Instead, we conduct an analysis with the nonparametric ATS method (Brunner and Puri 2001), as implemented in the R package nparLD (Noguchi et al. 2012). All p-values are low, suggesting that an interaction effect exists. However, if we conduct a more appropriate analysis using an ordered probit model (Bürkner and Vuorre 2019; Christensen 2023), we find no supportive evidence for such an effect (check our analysis in the supplementary material).

In conclusion, focusing solely on the issues with the rank tranformation illustrated in Figure 10 is akin to missing the forest for the trees. When parallel main effects are present, interpreting interactions can be challenging for all methods — and ART offers no solution to this problem.

Disagreement on the interpretation of effects in GLMs

We further discuss interpetation issues in the broader context of generalized linear models (GLMs).

GLMs are defined by a link function \(g\) that connects a variable — linearly defined by the predictors — to a response variable \(Y\), which is assumed to be generated from a distribution in an exponential family. Exponential families include a wide range of common distributions, such as the normal, log-normal, exponential, binomial, and Poisson distributions.

For an experimental design with two factors, \(x_1\) and \(x_2\), the expected value (or mean) of \(Y\) conditional on \(x_1\) and \(x_2\) is expressed as follows: \[ E[Y|x_1, x_2] = g^{-1}(a_0 + a_1 x_1 + a_2 x_2 + a_{12}x_1 x_2) \tag{4}\] where \(g^{-1}\) is the inverse of the link function.

Statistical procedures based on GLMs define effects in terms of the coefficients \(a_{1}\), \(a_{2}\), and \(a_{12}\) in the linear component of the model. That is, the null hypothesis should be rejected when the corresponding coefficient (e.g., the coefficient \(a_{12}\) for the interaction) is non-zero. However, there is no consensus among researchers on this approach. We clarify the debate and explain how we address it.

Defining interactions on the scale of the response variable. Ai and Norton (2003) and, more recently, McCabe et al. (2022) show that the interaction coefficient alone is not sufficient to describe the actual interaction trend on the natural scale of the response variable. They argue that an interaction effect should instead be defined as the observed change in the marginal effect of \(x_1\) as a function of \(x_2\). Thus, the trends shown in Figure 11 do represent interactions, since the difference between Tech A and Tech B changes as a function of Difficulty on the original reponse scale (time or perceived performance).

Using this definition, the authors show that a zero interaction coefficient (\(a_{12} = 0\)) does not necessarily imply the absence of an interaction effect. In fact, for continuous predictors, they demonstrate that when \(a_{12} = 0\), the interaction effect can still emerge and is proportional to \(a_1\) and \(a_2\).

Responding to Ai and Norton (2003). Greene (2010) responds that the interpretation of Ai and Norton (2003) “produces generally uninformative and sometimes contradictory and misleading results” and instead advocates for using the model’s coefficients as the basis for inference:

“Statistical testing about the model specification is done at this step. Hypothesis tests are about model coefficients and about the structural aspects of the model specifications. Partial effects are neither coefficients nor elements of the specification of the model. They are implications of the specified and estimated model” (Greene 2010, 295).

Our position is fully aligned with Greene’s argument. As our earlier example (see Figure 11) demonstrates, interactions assessed on the scale of the response variable can be misleading about the true underlying effects that generated the data. How meaningful is it to declare interaction effects that arise solely from parallel main effects and lack any theoretical interpretation? It is worth noting that Ai and Norton (2003), as well as McCabe et al. (2022), overlook Loftus’ (1978) critique of uninterpretable interactions.

Nevertheless, in most of our experiments we select scenarios that avoid these interpretation problems, ensuring that the Type I error rates of the methods we compare do not depend on how one defines interactions. We clarify this point below.

Clarifying when the interpretation of effects becomes ambiguous. In nonlinear models, ambiguities in interpreting interaction effects arise when all relevant main effects are present — that is, when the coefficients of all interacting factors are non-zero. Interpretation issues may also extend to main effects when the interaction coefficient \(a_{12}\) is non-zero. In such cases, the marginal effect of a factor (e.g., \(x_1\)) may vary across levels of another factor even when its associated coefficient (e.g., \(a_1\)) is zero.

By contrast, interpretation is unambiguous in the following situations:

Main effects, when the interaction coefficient \(a_{12}\) is zero. In this case, if \(a_1 = 0\), then the factor \(x_1\) has no effect on the response scale. Likewise, if \(a_2 = 0\), then the factor \(x_2\) has no effect on the response scale.

Interaction effect, when either \(a_{1}\) or \(a_{2}\) is zero. In this case, if \(a_{12}=0\), then there is no interaction between \(x_1\) and \(x_2\) on the response scale.

Proofs of these statements are provided in Appendix II. In these scenarios, error rates are comparable across methods, regardless of whether the null hypothesis is defined in terms of the coefficients of the linear model or the observed effects on the response scale. For example, the divergence in ART’s results in our illustrative example (see Figure 1) cannot be attributed to interpretation issues, since the sample was drawn from a population with no effect of Technique (\(a_{1} = 0\)) and no interaction (\(a_{12}=0\)).

Throughout the remainder of the article, we explicitly distinguish between situations in which effect interpretation is unambiguous and those in which it is not. Because different methods may rely on different assumptions about the null hypothesis, we analyze ambiguous cases separately. However, we emphasize that the existing literature offers no guidance on how ART should be expected to behave when the definition of a main or interaction effect is ambiguous. Early evaluations of ART focused on linear models. Although Elkin et al. (2021) consider nonlinear models, they do not address how main and interaction effects should be interpreted when the definition of the null hypothesis depends on the scale of responses.

5 Experimental method

We can now detail our experimental method. We evaluate the standard parametric approach (PAR) and the three rank-transformation methods (RNK, INT, and ART) that we introduced earlier. We conduct a series of Monte Carlo experiments that assess their performance under a variety of experimental configurations.

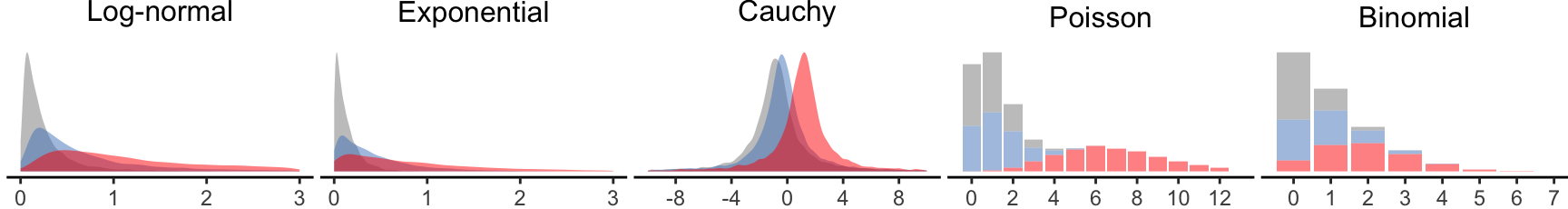

Distributions. We evaluate both ratio and ordinal data, drawn from the following distributions:

- Normal distribution \(\mathcal{N}(\mu, \sigma^2)\), where \(\mu\) is its mean and \(\sigma\) is its standard deviation. For most experiments where variances are equal, we set \(\sigma = 1\).

- Log-normal distribution \(\mathcal{LogN}(\mu, \sigma^2)\), where \(\mu\) is its mean and \(\sigma\) is its standard deviation at the logarithmic scale. The log-normal distribution is a good model for various measures bounded by zero, such as task-completion times. We set \(\sigma = 1\) in our main experiments but evaluate a wider range of parameters in Appendix I.

- Exponential distribution \(Exp(\lambda)\), where \(\lambda\) is its rate. The exponential distribution naturally emerges when describing the time elapsed between events. For example, we could use it to model the time a random person spends with a public display, or the waiting time before a new person approaches to interact with the display, when the average waiting time is \(\frac{1}{\lambda}\).

- Cauchy distribution \(\mathcal{Cauchy}(x_0, \gamma)\), where \(x_0\) defines its location (median) and \(\gamma\) defines its scale. The Cauchy distribution is the distribution of the ratio of two independent normally distributed random variables. It rarely emerges in practice. However, it is commonly used in statistics to test the robustness of statistical procedures because both its mean and variance are undefined. As we discussed earlier, past evaluations of ART (Mansouri and Chang 1995; Elkin et al. 2021) show that the method fails under the Cauchy distribution. We set \(\gamma = 1\).

- Poisson distribution \(\mathcal{Pois}(\lambda)\), where \(\lambda\) is its rate. It expresses the probability of a given number of events in a fixed interval of time. For example, we could use it to model the number of people who interact with a public display in an hour, when the average rate is \(\lambda\) people per hour.

- Binomial distribution \(\mathcal{B}(\kappa, p)\), where \(\kappa\) defines the number of independent Bernoulli trials and \(p\) is the probability of error or success of each trial. It frequently appears in HCI research, as it can model the number of successes and failures in a series of experimental tasks. We set \(\kappa = 10\) in our main experiments but evaluate a wider range of parameters in Appendix I.

- Distributions of Likert-item responses with 5, 7, and 11 discrete levels.

Experimental designs. We present results for five experimental designs. To simplify our presentation, we start with (i) a 4 \(\times\) 3 within-subject factorial design. We then show how our conclusions generalize to four additional designs: (ii) a 2 \(\times\) 3 between-subject design; (iii) a 2 \(\times\) 4 mixed design, with a between-subject factor and a within-subject factor; (iv) a 2 \(\times\) 2 \(\times\) 2 within-subject design; and (v) a 3 \(\times\) 3 \(\times\) 3 within-subject design.

Sample sizes. We focus on three sample sizes, \(n=10\), \(n=20\), and \(n=30\), where \(n\) represents the cell size in an experimental design. However, for certain scenarios, we also report results for larger sample sizes, up to \(n = 512\). In within-subject designs, where all factors are treated as repeated measures, \(n\) corresponds to the number of subjects \(N\) (commonly human participants in HCI research). In contrast, in a 2 \(\times\) 3 between-subject design, a cell size of \(n = 20\), implies a total of \(N = 120\) subjects.

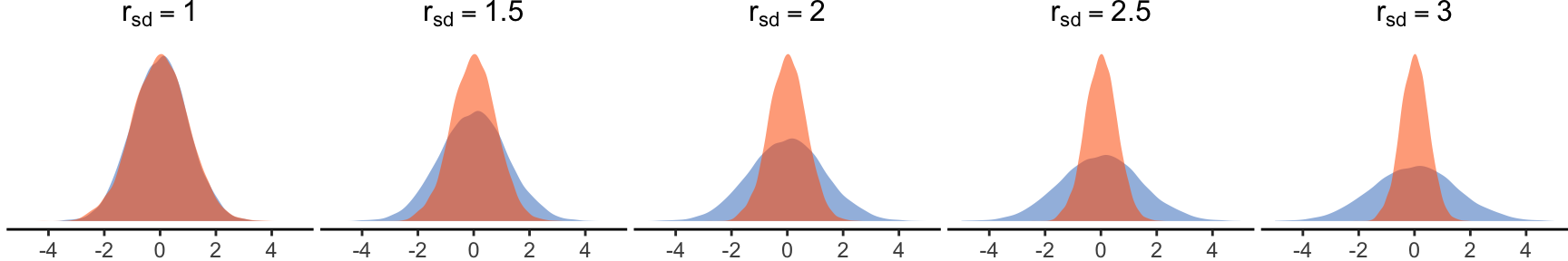

Equal vs. unequal variances. For normal and ordinal distributions, we test the robustness of the methods when variances are unequal.

Evaluation measures. In addition to Type I error rates, we compare the statistical power of the methods and compare their effect size estimates with ground-truth estimates.

Magnitude of effects. We analyze both main and interaction effects, examining how increasing the magnitude of effect of one factor influences the Type I error rates of other factors and their interactions. In addition, we examine how increasing two main effects in parallel influences the Type I error rate of their interaction, while emphasizing that the definition of the null hypothesis in this case is ambiguous.

Previous evaluations of rank transformation methods (Beasley, Erickson, and Allison 2009; Lüpsen 2018) have also examined unbalanced designs, where cell sizes vary across the levels of a factor. When combined with unequal variances, such designs often pose challenges for both parametric procedures (Blanca et al. 2018) and rank transformation methods (Beasley, Erickson, and Allison 2009; Lüpsen 2018). As noted earlier, we do not consider unbalanced designs in our main article. However, we provide additional experimental results on missing data in Appendix I.

Statistical modeling

For simplicity, we explain here our modeling approach for two factors, but its extension to three factors is straightforward.

Linear component. The base of our data generation process for all distributions is the following linear predictor:

\[ \ell_{ijk} = \mu + s_k + a_1 x_{1i} + a_2 x_{2j} + a_{12} x_{1i} x_{2j} \tag{5}\]

\(\mu\) is the intercept

\(s_k \sim \mathcal{N}(0, \sigma_s^2)\) is the random intercept effect of the k-th subject, where \(k = 1..n\)

\(x_{1i}\) is a numerical encoding of the i-th level of factor \(X_1\), where \(i = 1..m_1\)

\(x_{2j}\) is a numerical encoding of the j-th level of factor \(X_2\), where \(j = 1..m_2\)

\(a_1\), \(a_2\), and \(a_{12}\) express the magnitude of main and interaction effects

While random slope effects can have an impact on real experimental data (Barr et al. 2013), we do not consider them here for two main reasons: (1) to be consistent with previous evaluations of the ART procedure (Elkin et al. 2021); and (2) because mixed-effects procedures with random slope effects are computationally demanding, adding strain to simulation resources. However, there is no good reason to believe that adding random slope effects would impact our findings and conclusions.

Encoding the levels of factors. To encode the levels of the two factors \(x_{1i} \in X_1\) and \(x_{2j} \in X_2\) we proceed as follows:

We normalize the distance between their first and their second levels, such that \(x_{12} - x_{11} = 1\) and \(x_{22} - x_{21} = 1\). This approach enables us to conveniently control for the main and interaction effects by simply varying the parameters \(a_1\), \(a_2\), and \(a_{12}\).

For the remaining levels, we randomly sample from a uniform distribution that spans the range between these two extreme levels, i.e., between \(x_{11}\) and \(x_{12}\) for \(X_1\), and between \(x_{21}\) and \(x_{22}\) for \(X_2\). This approach allows us to generate and evaluate a substantial variety of configurations, each representing different relative effects between levels.

We require all levels to sum up to 0, or \(\sum\limits_{i=1}^{m_1} x_{1i} = 0\) and \(\sum\limits_{j=1}^{m_2} x_{2j} = 0\), which ensures that the grand mean is \(\mu\).

For example, we can encode a factor with four levels as \(\{-.6, .4, .1, .1\}\) or as \(\{-.5, .5, .3, -.3\}\).

Generating ratio data. We generate ratio-scaled data using generalized linear modeling (Bolker et al. 2009). Specifically, we use an inverse link function \(g^{-1}\) to relate the linear predictor \(\ell_{ijk}\) to the location parameter of the target distribution:

\[

Y_{ijk} \sim \mathcal{F}(location=g^{-1}(\ell_{ijk}), ...)

\tag{6}\]

where \(\mathcal{F}\) denotes the probability density (or mass) function from which the responses \(Y_{ijk}\) are drawn. For example, the mean of the exponential distribution is equal to \(1 / \lambda\), where \(\lambda\) is its rate parameter. This mean is linked to the linear predictor through the inverse link function \(g^{-1}(x) = e^{x}\), which implies \(\lambda = e^{-\ell_{ijk}}\). Similarly, for the binomial distribution, the canonical link function is the logit function, whose inverse is the logistic function \(g^{-1}(x) = 1 / (1 + e^{-x})\).

Table 1 summarizes how the location parameters of all the distributions are linked to the linear predictor. We emphasize that the Cauchy distribution does not define a generalized linear model (because it does not belong to an exponential family) but we nevertheless use a model that links its location (the median) to the linear predictor via the identity link.

| distribution | location parameter | description |

|---|---|---|

| Normal | \(\mu = \ell_{ijk}\) | mean |

| Log-normal | \(\mu = \ell_{ijk}\) | mean at logarithmic scale (logarithm of median) |

| Exponential | \(\lambda = e^{-\ell_{ijk}}\) | rate (1 / mean) |

| Cauchy | \(x_0 = \ell_{ijk}\) | median |

| Poisson | \(\lambda = e^{\ell_{ijk}}\) | rate (mean) |

| Binomial | \(p = 1 / (1 + e^{-\ell_{ijk}})\) | probability of success or error |

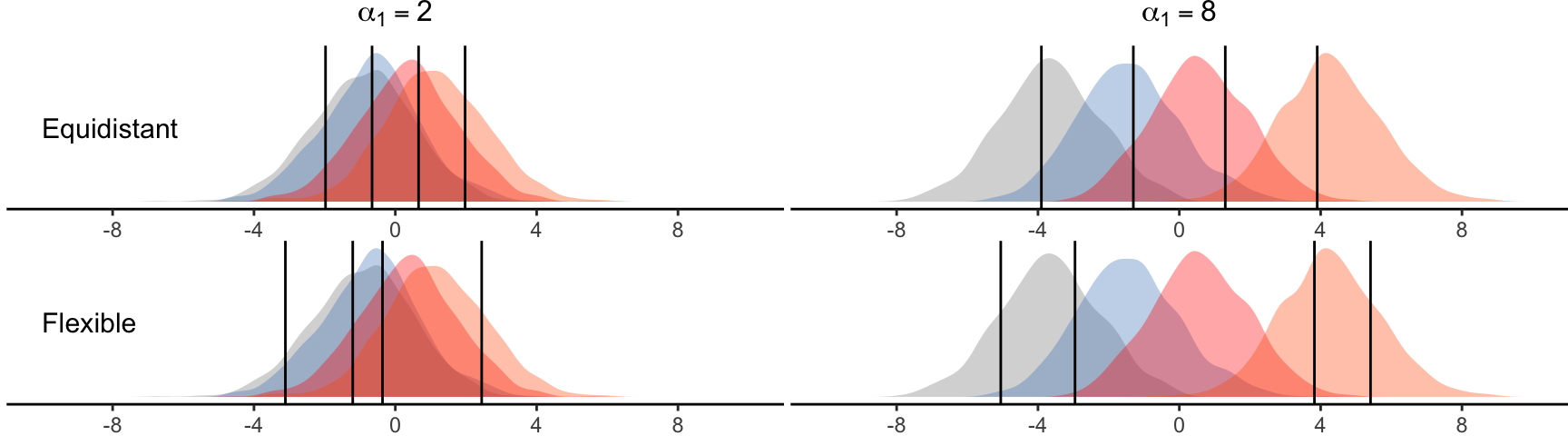

Generating ordinal data. For generating ordinal data, we follow a latent-variable approach as explained by Liddell and Kruschke (2018). Specifically, we consider that there is a continuous latent variable that follows a normal distribution: \[ Y_{ijk}^* \sim \mathcal{N}(\mu = \ell_{ijk}, \sigma^2 = 1) \tag{7}\] From this, we derive discrete responses \(d = 1,2 ... D\) by applying fixed thresholds \(\tau_d \in (-\infty, \infty)\) as follows: \[ \begin{aligned} Y_{ijk} = d\ \ \text{if}\ \ \tau_{d-1} < Y_{ijk}^* \leq \tau_d \end{aligned} \tag{8}\] Since, in practice, \(\tau_0 = -\infty\) and \(\tau_D = \infty\), generating responses with \(D\) discrete levels requires \(D-1\) thresholds.

Effects and population control

We next detail how we select the parameters of the linear component (see Equation 5) for the various distributions, as well as the thresholds of the ordinal model (see Equation 8).

Magnitude of effects. We control the magnitude of effects by varying \(a_1\), \(a_2\), and, for some experiments (those evaluating power for interactions), \(a_{12}\). Figure 12 presents normal distributions (\(\sigma = 1\)) generated across the range of values of \(a_1\) used to evaluate Type I error rates. In these examples, we illustrate effects for a factor with three categorical levels and, for clarity, disregard between-subject variability (\(\sigma_s = 0\)).

Intercept and variance parameters. Fixing the intercept \(\mu\) directly in a nonlinear model generally leads to arbitrary population-level response distributions, because the marginal mean depends on the link function, the response distribution, the variance of the random effects, and the magnitude of the fixed effects. We therefore calibrate the intercept \(\mu\) so that the induced location of the responses matches a pre-specified target value. Table 2 reports the target location for each distribution, which is typically the overall mean in all cases except for the Cauchy distribution, where the median is used. We detail how \(\mu\) is analytically derived from these target values in Appendix II.

| Normal | Log-normal | Exponential | Cauchy | Poisson | Binomial | Ordinal |

|---|---|---|---|---|---|---|

| 0 | 1 | 0.5 | 0 | 3 | 0.1 (per trial) | 0 |

Figure 13 shows distributions generated with these location values when \(a_1 = 2\) for a factor with three categorical levels.

In practice, we randomly choose the standard deviation \(\sigma_s\) — which determines between-subject variability — from the interval \([0.1, 0.5]\), drawing each value from a uniform distribution.

Thresholds for ordinal scales. For ordinal data, we evaluate \(D =\) 5, 7, or 11 discrete levels. To derive the discretization thresholds \(\tau_d\), we first consider the range \([-2SD, 2SD]\) , where \(SD\) is the overall standard deviation of the latent variable \(Y^{*}_{ijk}\). We then divide this range into 5, 7, or 11 intervals, following two different strategies: (i) setting thresholds to be equidistant; or (ii) considering flexible thresholds, randomly drawing their position in the above range.

Figure 14 presents examples of equidistant and flexible thresholds for a 5-level scale when the magnitude of main effect of \(X_1\) is either \(a_1 = 2\) or \(a_1 = 8\), while all other effects are zero.

Implementation of rank transformation methods

For the aligned rank transformation (ART), we use the R implementation of ARTool v0.11.1 (Kay et al. 2021). For the pure rank transformation (RNK), we use R’s rank() function. We use the Rankit formulation (Bliss, Greenwood, and White 1956) for the inverse normal transformation (INT), as explained earlier.

Unless stated otherwise, we analyze the data using ANOVA with R’s aov() function and the model specification Y' ~ X1 * X2 + Error(S). For balanced designs, this approach yields results that are identical or nearly identical to those obtained using R’s lmer() function with the model specification Y' ~ X1 * X2 + (1 | S).

Evaluation measures

Significance tests have two types of errors. Type I errors, or false positives, are mistaken rejections of the null hypothesis. Type II errors, of false negatives, are failures to reject a null hypothesis that is actually true. In our illustrative example in Figure 1, a Type I error is finding that there is an effect of the choice of the technique on time performance. A Type II error, in turn, is finding that the task difficulty has no effect on time performance.

Statistical significance testing requires setting a significance threshold known as significance or \(\alpha\) (alpha) level, with typical values \(\alpha = .05\) and \(\alpha = .01\). The Type I error rate of a well-behaved significance test should be close to this nominal alpha level. An error rate clearly above this level suggests that the significance test is too liberal, while an error rate clearly below this level suggests that the test is too conservative. We test two significance levels: \(\alpha = .05\) and \(\alpha = .01\). For brevity, we report only the results for \(\alpha = .05\) and include additional results in Appendix I and our supplementary material.